By Tina Reis, Senior SEO Strategist @ Muhlert Digital

Good content is expensive. So when generative AI became widely available, it was understandable that marketing teams jumped on it. Less investment, same return? Sounds good.

But at the same time, a divide is opening: Some marketers are vehemently against using AI for content. Here are their arguments:

- AI makes mistakes.

- You can’t get it to produce high enough quality (“AI slop”).

- It can’t hit your brand voice.

- It can’t be original.

- And it doesn’t perform.

I belong to this group – I rely on human writers for quality content and am hesitant to adopt automation for the sake of it. But I am also data-driven, and I want to know that when I make a recommendation to a client, it is on a solid foundation.

So I decided to fact-check all of these arguments with an open mind. In this article, I will go through each one and give a data-based verdict. Does the case against using AI for content hold up under scrutiny? Let’s find out.

1. AI makes mistakes.

When AI makes claims that are not factually correct, we call this a hallucination. The internet is full of hilarious examples of this, but let’s look at some data:

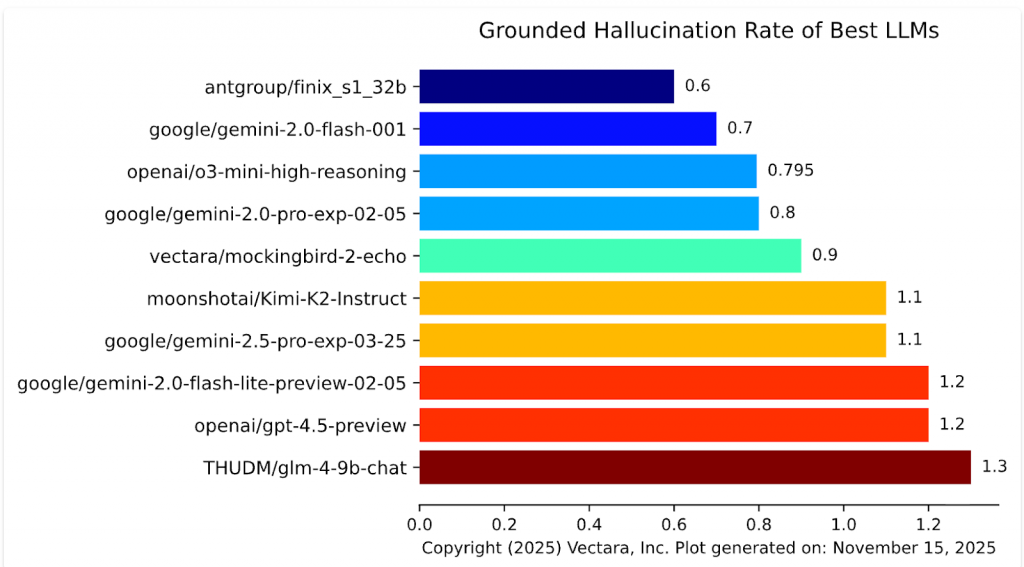

Vectara keeps a hallucination leaderboard that shows how often different LLMs introduce hallucinations when summarizing a document. The result: The 25 best LLMs had a hallucination rate of 0.6 to 1.3%.

These numbers sound small, but for a task where all data is already present and the AI only has to summarize, this has me worried.

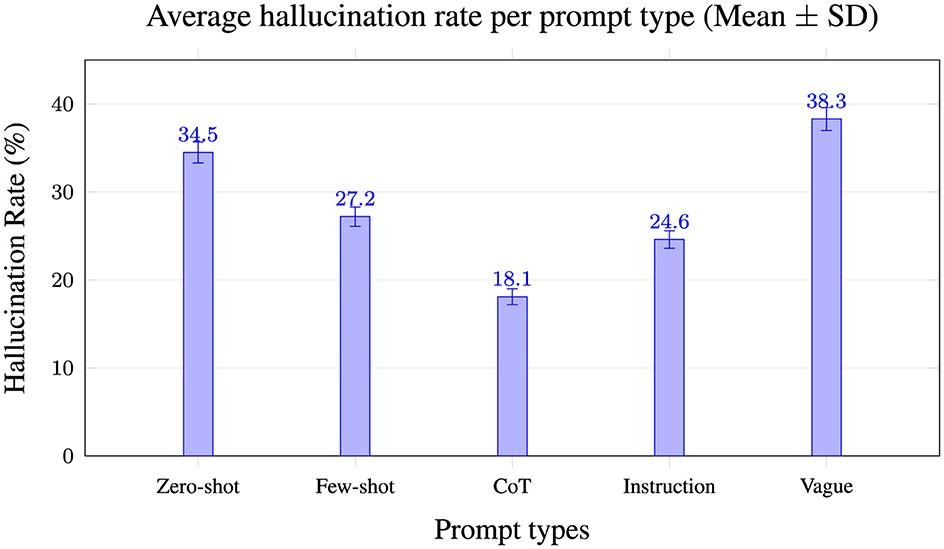

Moving on to open prompts, hallucination rates go up considerably: According to a 2025 study by Dang Anh-Hoang, Vu Tran and Le-Minh Nguyen at the Japan Advanced Institute of Science and Technology in Nomi, hallucination rates in prominent LLMs vary from 18.1 to 38.3% depending on the type of prompt:

| Prompt | Explanation | Example | Hallucination Rate |
| --- | --- | --- | --- |
| Zero shot | One open question | What is the capital of Switzerland? | 34.5% |
| Few shot | A question with multiple-choice options | What is the capital of Switzerland? Is it Berlin, Bern or Rome? | 27.2% |
| Chain of thought | Encourages step-by-step thinking | Where is Switzerland? What are the largest Swiss cities? Now which of these is the capital? | 18.1% |
| Instruction | Question includes the instruction to respond with a concise and factually correct answer. | What is the capital of Switzerland? Respond with a concise and factually correct answer. | 24.6% |
| Vague | Question is misleading. | What is the capital of Switzerland, I heard it is Geneva? | 38.3% |

For content generation, we would probably use an instruction prompt, which had an average hallucination rate of 24.6% in this study, or roughly one in four. I don’t like these odds, especially because I know that fact-checking involves more than reading a draft and thinking it sounds plausible. My verdict is: Yes, hallucinations are a valid concern.
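To put the rates quoted above into perspective, here is a rough back-of-the-envelope sketch in Python. It estimates the chance that a draft contains at least one hallucination when it makes several factual claims. Two things here are my assumptions and not part of either study: that a quoted rate can be applied to each individual claim, and that claims go wrong independently of each other. The function names and the ten-claim draft are purely illustrative.

```python
def chance_of_at_least_one_error(error_rate: float, n_claims: int) -> float:
    """Probability that at least one of n_claims is wrong.

    Assumption (not from the cited studies): every claim has the same
    error rate and claims fail independently of each other.
    """
    if not 0.0 <= error_rate <= 1.0:
        raise ValueError("error_rate must be between 0 and 1")
    if n_claims < 0:
        raise ValueError("n_claims must not be negative")
    # P(at least one error) = 1 - P(every claim is correct)
    return 1.0 - (1.0 - error_rate) ** n_claims


# Rates as quoted in this article, written as fractions.
RATES = {
    "summary_best": 0.006,   # lower end of the top 25 on the Vectara leaderboard
    "summary_worst": 0.013,  # upper end of the top 25 on the Vectara leaderboard
    "instruction": 0.246,    # instruction prompt in the 2025 study
}


def build_report(n_claims: int = 10) -> dict[str, float]:
    """Chance of at least one error per scenario, rounded to three decimals."""
    return {
        label: round(chance_of_at_least_one_error(rate, n_claims), 3)
        for label, rate in RATES.items()
    }


if __name__ == "__main__":
    # Hypothetical draft with ten factual claims.
    for label, probability in build_report(10).items():
        print(f"{label}: {probability:.1%}")
```

Under these assumptions, even the small summarization rates add up over a ten-claim draft, and at the instruction-prompt rate a draft with no errors at all becomes the exception. Treat this as an illustration of why the odds matter, not as a measurement.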

2. AI can’t produce high enough quality.

There is a lot of talk about “AI slop” – when AI produces content that is so obviously bad that people take to social media to complain. But I think when it comes to text quality, the discussion is missing an important point: Humans produce slop too.

We had low-quality website copy long before AI came around. The source doesn’t matter as much as the end result.

In fact, an MIT study found that when participants didn’t know the source of marketing materials, they preferred AI-generated content. Other peer-reviewed studies have concluded that even though 82.1% think they can spot AI, humans can’t reliably tell AI from human content (CISPA 2024, Fiedler/Döpke 2025).

Still, we as content marketers should not be okay with putting low-quality content on websites just because our audience can’t tell whether we used AI or not. We aim for quality. So let’s look at the more important question: If we give AI very specific instructions for how to meet our standard of quality, can it execute?

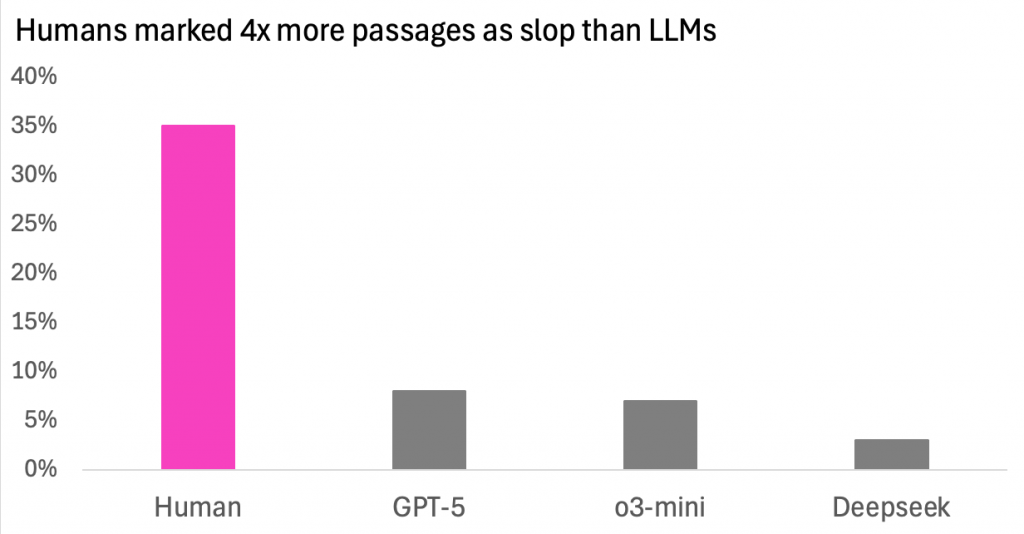

Here, I found an interesting study by Chantal Shaib, Tuhin Chakrabarty, Diego Garcia-Olano and Byron C. Wallace from 2025 (currently under peer review). They gave human reviewers and LLMs a set of very specific, very lengthy instructions with examples on how to recognize “slop” (based on criteria such as factual accuracy, relevance, or tone issues such as overly verbose and exaggerated statements). Then they asked both groups to review a body of text and mark all passages that met the definition of slop.

The result: Human reviewers marked 4 times more passages as slop than GPT-5, o3-mini and DeepSeek.

These results make me doubt whether the common statement “garbage in, garbage out” is even true, given that the researchers provided the LLMs with very long and thoughtful instructions and they still didn’t perform. This is why my verdict for this argument is, as it stands: It is valid.

3. AI doesn’t hit brand voice.

Now a disclaimer for this one: I’m not the best person to judge brand tone and voice. I analyze spreadsheets all day! I also couldn’t find any studies that have measured brand voice and tested whether AI could replicate it (how do you measure brand voice, anyway?).

Instead, I am relying on anecdotal evidence, and as far as I understand, this is the process you would use for this: You feed a custom GPT a large enough volume of your existing brand writing, and it learns how to replicate the tone and voice.

I don’t have any evidence to the contrary, so I am assuming that this works. But there is a very big caveat – for this to work, you need to:

- Have a clearly defined brand guide. Content strategists will tell you that this involves more than just “use this word instead of that” and “be authentic”.

- Have a large set of existing high-quality texts.

What this comes down to in practice is that you need to have already invested in high-quality content in the past for this to work. Honestly, how many brands does this apply to?

If you’re working with small to medium businesses, chances are high they have never invested in a content strategy beyond maybe keyword clusters. Large brands, yes, maybe. For this argument, my verdict is: Probably workable with a big caveat.

4. AI can’t be original.

By definition, LLMs can’t be original because they are trained on existing data. They might, however, draw new connections between those data points. Case closed? I don’t think so. The better question, in my opinion, is: Do you even need your content to be original?

We always talk about how we win in traditional search by fulfilling the search intent in a unique way. If we do it right, we aim to be better than competitors at anticipating our audience’s needs. Users clearly want variety and not 10 blue links that all tell the same story, which is why Google patented their method of comparing bodies of text and calculating a “novelty score” as part of the algorithm.

LLMs work differently, though. Their goal is not to present a curated set of sources; it is to formulate a response out of the consensus of existing sources. Citations only serve to reinforce the response, not to offer something new and unique. It makes sense, too, because if LLMs didn’t gravitate towards the middle, hallucinations and radicalization would go through the roof.

What does this mean for us? If we’re optimizing for traditional search, we need to offer something unique. If our goal is LLM citations, we might not need anything original at all.

A recent study presented by Ed Coles of Kaizen at BrightonSEO could be the data confirmation for this argument: Kaizen had ChatGPT and a human writer go head to head by having them write a blog post for the same keyword. The results were clear: Human content achieved 87% of clicks on Google and ranked for 68% more keywords, but AI-written content was 41% more likely to be cited in LLMs.

Kaizen did not go into the reasons, but I am speculating that human writers introduce novelty and originality to a piece in a way that ChatGPT can’t. Google rewards this, while LLMs prefer the articles that give the same response they would give.

My verdict: Yes, unless you include original data in your prompt, LLMs won’t produce anything original. If your only aim is to achieve LLM citations, that might be enough, but given that Google is still our main source of traffic, we can’t let go of the 10 blue links – yet.

5. AI-generated content doesn’t perform.

Our ultimate goal for content is, of course, that it brings in clicks, and in the age of AI, citations and visibility. Numerous studies have looked at how AI-generated content performs in search, with mixed results:

When SEMrush asked SEOs themselves in a survey, 33% said their AI content performed better, 31% said it performed about the same, and only 9% said it performed worse. A large share (27%) just wasn’t sure. This study, however, didn’t differentiate between the different roles AI plays in the content process: Does it create the outline, write the draft, or assist with ideation, and how much editing is involved?

SE Ranking tested different scenarios and came to the conclusion that content where AI only wrote the draft, but humans gave long and clear instructions on tone and outline, performed well in traditional search as well as AI Overviews. But without any human involvement, on a fresh website with only AI content, it tanked.

I’m not satisfied with any of these methodologies.

- The Kaizen study didn’t give clear instructions. What would have happened if the writer had been trained on how to optimize for LLM citations?

- The SEMrush study didn’t differentiate between how AI was used and only had self-reported performance data.

- SE Ranking compared two very different scenarios: a fresh website scaled with only AI content in a short amount of time doesn’t compare to a heavily edited draft published on a blog with existing authority.

There are probably many more studies out there that I didn’t read. My verdict on the studies that I did review is, however: The jury is still out.

Should you use AI for content, then?

I have found all arguments against using AI for content to be more or less valid, with some caveats. SE Ranking probably showed how to do AI content the right way: With an extensive briefing, an existing body of work to match style guidelines, and human editing before publication. But then, they were in the best position to do this, not least because they have an experienced team of editors. Not everybody has that.

If you’re using AI, you need to invest in preparation, unique data, fact-checking and editing to make it work. I don’t think that at this point in time, such a process is feasible for brands that have not already invested in content.

What I really want to know: Will a process like that really improve ROI? I’m not sure.

For these reasons, I don’t recommend AI for content generation to my clients. That said, a lot of the studies I cite above were commissioned by AI companies, a lot of research is going into these areas, and the space moves fast. I reserve the right to change my mind.